
On the Ellii platform, you'll find thousands of relevant lessons and several types of digital tasks where English learners can practice different skills, including reading, writing, speaking, and listening.

If you've ever assigned your students a digital task through Ellii, you may have noticed that the platform auto-corrects many task types. That's because those tasks (e.g., fill in the blanks, multiple-choice, true or false) have only one correct answer.

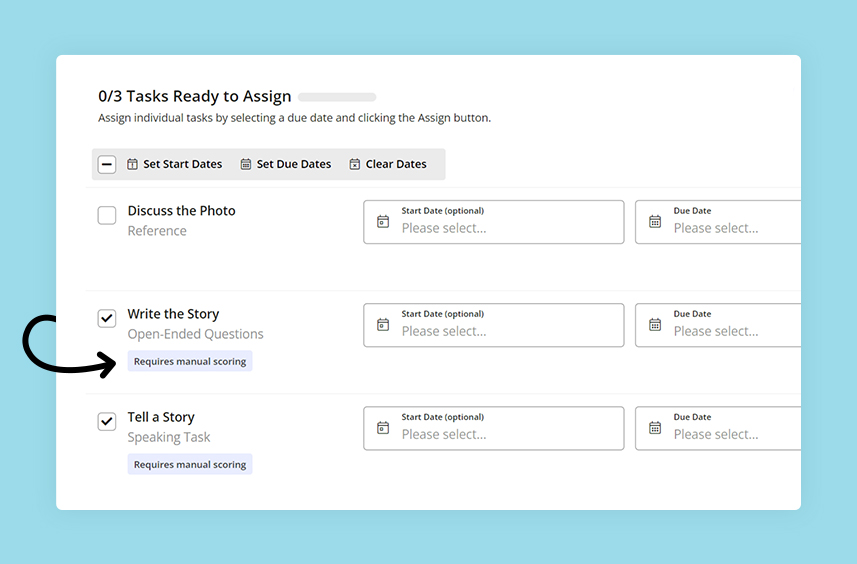

However, there are a few task types that require manual scoring (e.g., writing and speaking), which can be time-consuming for teachers to grade.

This means that when you assign a full lesson with multiple tasks, including open-ended speaking and writing tasks, chances are you're spending more time grading assignments than you'd like to.

Realizing this led us to ask ourselves the following question:

How can we make this easier on teachers and reduce grading time?

Prompted by this question, our developers set out to make some manually scored tasks auto-scorable, paving the way for Ellii's new Auto-Scoring (AI) feature.

Here's a behind-the-scenes look at how we developed Ellii's Auto-Scoring (AI) feature:

Phase 1: Research

We started by talking to the Publishing team about how these manually scored questions are created in digital lessons and how they work internally.

We identified two types of written responses:

- Opinion questions (or open-ended questions)

  - Example: Do you like pizza?

- Correct/incorrect questions (or closed questions)

  - Example: What is the man in the video doing?

Every month, students answer nearly half a million questions in writing. More than 80% of those are closed questions.

Since written responses are the most frequently assigned task type and take the most time to grade, it made sense for our team to start with auto-scoring these. As for grammar, vocabulary, and spelling tasks, we will consider auto-scoring them in the future.

After doing extensive research on written responses, our team realized we could use artificial intelligence (AI) to match the student's answer to the correct answer (i.e., the suggested answer that shows up on the platform).

The field of AI that deals with this kind of problem is known as Natural Language Processing (NLP).

Wikipedia defines NLP as follows:

". . . interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them."

In short, we decided to use NLP to semantically match the student's answer to the correct answer. In other words, the NLP model compares the meaning of the student's response with the meaning of the suggested answer, so the two don't have to match word for word.
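To make the idea concrete, here is a minimal Python sketch of scoring one answer against a suggested answer. It is not Ellii's implementation: this post doesn't name the algorithm we ended up using, so the sketch assumes cosine similarity over simple word-count vectors as a stand-in. Word counts only capture overlapping words, whereas a true semantic matcher would swap in sentence embeddings from an NLP model, which also recognize paraphrases. The function names and the student answer are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (0.0 to 1.0)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty answer matches nothing
    return dot / (norm_a * norm_b)


def similarity(student_answer: str, suggested_answer: str) -> float:
    # Assumption: word counts stand in for the vectors. A real semantic
    # matcher would build these vectors from sentence embeddings instead.
    return cosine_similarity(
        Counter(tokenize(student_answer)),
        Counter(tokenize(suggested_answer)),
    )


suggested = "He tripped over a phone cord and fell in the boss's office."
student = "He fell in the boss's office after tripping on a cord."  # hypothetical
print(round(similarity(student, suggested), 2))
```

A result near 1 means the two answers share most of their wording, and a result of 0 means they share none. With embeddings in place of word counts, the same comparison would reward answers that mean the same thing even when the words differ.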

Here’s an example of what a semantic match looks like:

Phase 2: Analysis

Up until this point, our research was all theory. We now needed to put it into practice.

The first thing we did was take a small sample of different types of written responses: complex ones, simpler ones, and ones where the answer is a list of items. Then we took random student answers for those sample questions and began analyzing them.

We ran multiple NLP algorithms to test them. Our developers compared student answers to the correct answer and took notes on the score the AI was assigning.

We manually analyzed all the answers one by one to see which algorithms produced sensible scores. Since we're developers and not teachers, we also compared each AI score against the score the teacher had given the student for that question.

In doing so, we quickly noticed that one of the algorithms was looking very promising.
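As a rough illustration of this kind of comparison, the sketch below ranks candidate algorithms by how far their scores fall from the teacher's scores on the same answers. Everything in it is an assumption made for the example: the algorithm names, the 0-to-1 score scale, the numbers themselves, and the choice of mean absolute error as the yardstick. None of it is real Ellii data or the exact method we used.

```python
from statistics import mean

# Hypothetical data: teacher scores and two candidate algorithms' scores
# for the same five answers, all on a 0-to-1 scale. Not real Ellii data.
teacher_scores = [1.0, 0.0, 1.0, 0.5, 1.0]
candidate_scores = {
    "algorithm_a": [0.9, 0.2, 0.8, 0.5, 1.0],
    "algorithm_b": [0.4, 0.6, 0.5, 0.9, 0.3],
}


def mean_absolute_error(predicted: list[float], actual: list[float]) -> float:
    """Average distance between an algorithm's scores and the teacher's."""
    if len(predicted) != len(actual):
        raise ValueError("score lists must have the same length")
    return mean(abs(p - a) for p, a in zip(predicted, actual))


# Lower error means the algorithm agrees more closely with the teacher.
errors = {
    name: mean_absolute_error(scores, teacher_scores)
    for name, scores in candidate_scores.items()
}
best = min(errors, key=errors.get)

for name, error in sorted(errors.items(), key=lambda item: item[1]):
    print(f"{name}: mean absolute error {error:.2f}")
print("closest to teacher scores:", best)
```

Using the teacher's score as the reference keeps the comparison grounded in what teachers actually expect, rather than in what looks reasonable to a developer.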

Let's see it in action! The following examples show how writing tasks are scored by AI vs. a teacher:

Question 1

What happened in the boss’s office?

Ellii's suggested answer

He tripped over a phone cord and fell in the boss’s office.

You'll notice that even if the student's answer doesn’t fully match the Publishing team's suggested answer, the AI does consider the meaning behind the sentence and sets a score accordingly.

Question 2

Why does the reviewer mention food labels? Use the word "disclose" in your answer.

Ellii's suggested answer

The reviewer compares how app makers have to disclose what data is shared in their apps, just like how food sellers have to disclose information about what's in the food. Later he says that in their privacy label requirements, Apple doesn't ask app developers to say who they share data with. The reviewer says this is like having a nutrition label without listing the ingredients. He sums up by saying that we deserve an honest account of what's in both our food and our apps.

This is a very complex question and answer. You'll notice the NLP service was able to compare the semantics of the student's response against the suggested answer.

Question 3

How does the reading end?

Ellii's suggested answer

The reading ends with a question worth pondering. Since it is the migrant who has to take the action and send the money home, what happens if he/she decides to cut the family off? Do his/her dependents have any way to coerce the migrant to continue funding the family back home? Imagine what happens when a migrant remarries in a foreign country. Will his/her new family agree to share the family income?

Phase 3: Closed beta

The results were very promising at this point, but we weren't yet confident about how useful teachers would find the feature or how accurate the AI scoring was.

So we decided to do a closed beta test. This meant selecting a handful of Ellii teachers and inviting them to test the feature.

We ran the closed beta in May 2022, and teachers were very happy with it according to the feedback we received from our beta test survey.

Here are some notable statistics:

- 4,340 questions were answered and auto-scored during the month of May 2022

- Teachers changed the AI score in only 3.2% of questions

- 66% of surveyed teachers said they would love to have this feature be permanent

- 33% said they don’t have a strong opinion on whether AI was useful or not
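To put the 3.2% figure in perspective, the short calculation below converts it into an approximate number of questions. The variable names are ours, and because 3.2% is a rounded figure, the resulting counts are estimates rather than exact totals.

```python
auto_scored_questions = 4340  # questions auto-scored in the May 2022 closed beta
override_rate = 0.032         # share of questions where a teacher changed the AI score

# Estimated counts; the published rate is rounded, so these are approximate.
overridden = round(auto_scored_questions * override_rate)
kept = auto_scored_questions - overridden

print(f"about {overridden} scores changed, about {kept} left as assigned")
```

In other words, teachers left the AI's score untouched on roughly 4,200 of the 4,340 questions.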

After thoroughly analyzing the results, we noticed a few areas where we could improve the AI to provide teachers and their students with more accurate scoring.

We worked on these improvements, and now we're ready for the next phase.

Phase 4: Open beta

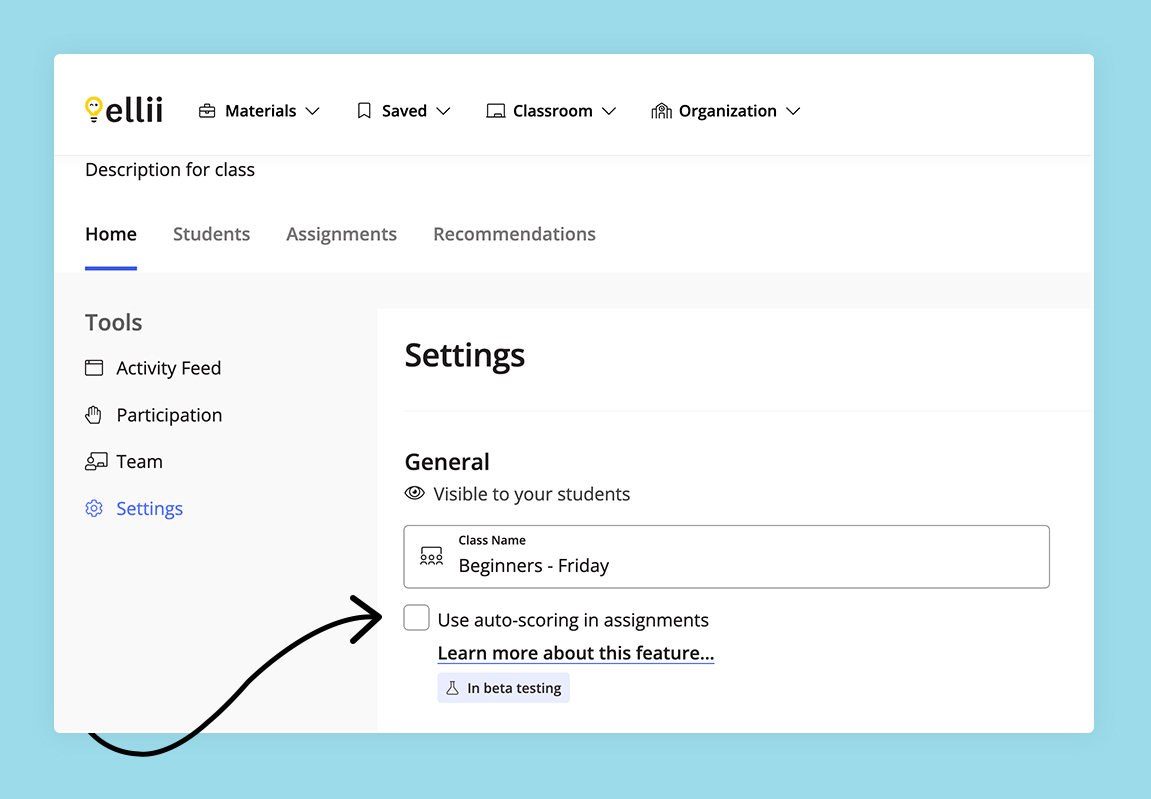

We're now officially running an open beta test of the Auto-Scoring (AI) feature. This means that Ellii teachers can opt in to use this feature in their classes.

You'll find the auto-scoring opt-in checkbox when you create or edit a class. Note that you need to opt in for each class and assign tasks to students within that class in order for the AI to work.

Have you tried Ellii's Auto-Scoring (AI) feature yet?

Share your feedback with us in the comments! Let us know what you loved and what you think needs improvement so that we can continue to make grading easier for you.
